Fast Index Creation really matters

In one of the recent projects I've been involved in, I started reviewing the data model to find additional or unnecessary indexes on tables. The database had recently been moved from the MyISAM to the InnoDB storage engine. Since some critical queries were hanging inside InnoDB for a long time, I decided to remove some of the redundant indexes from a few tables and then re-validate the queries with fewer index options available to the optimizer. To remove the indexes, I had two options: a simple ALTER TABLE … DROP INDEX, or pt-online-schema-change, which keeps the table available to the application while the indexes are being removed. This is not a routine operation on MySQL versions prior to 5.5 (or 5.1 + InnoDB Plugin), and here it was a sensitive one. The very first table targeted by the ALTER TABLE used to reside on MySQL 5.0 (a traumatic period), and it is one of the biggest tables in the schema, at 784GB. The table now resides on MySQL 5.5, but the trauma from MySQL 5.0 remains in the team members' minds.

The whole operation went very well as far as the ALTER TABLE execution is concerned: it was fast and painless. Folks on the customer side often want to feel comfortable with a solution before it is applied, so they don't lose sleep at night or over weekends. I like that as well, because of the audit process it implies. By the way, the ALTER TABLE that dropped the indexes was executed on the master server and replicated to 10 slave servers, and everything is running well. Be careful when modifying tables directly on slaves: at least on 5.5, I ran into a problem that has been reported at bugs.mysql.com, and you can check it here (http://bugs.mysql.com/bug.php?id=60784).

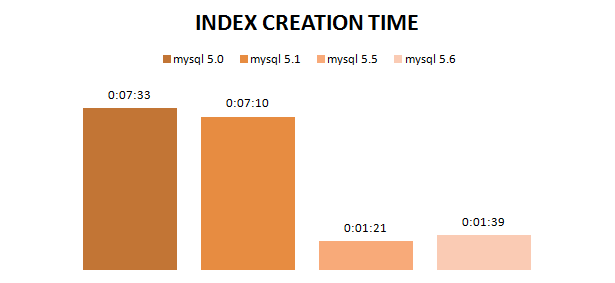

With that context, the intention of this post is to demonstrate how much faster it is to CREATE or DROP a secondary index on InnoDB tables in newer MySQL versions. I wanted to compare 5.0 and 5.5, and because I am planning to migrate all my customers to 5.6, I have included timings for 5.6 (and 5.1) as well.

Test Scenario

To benchmark index creation and removal, the very first thing we need is a table with a large amount of data and some complex column types, such as large VARCHAR, TEXT and BLOB columns. That gives us a demanding scenario for index operations on both new and old MySQL versions. I would like to draw your attention to one point. From 5.1 + InnoDB Plugin and 5.5 onwards, adding or removing a secondary index on an InnoDB table does not require a table copy-alter-rename, thanks to Fast Index Creation. This is the opposite of what happens when a clustered index column has to be altered. That is the focus of the tests here, and versions 5.0, 5.1, 5.5 and 5.6 are part of this small benchmark. I've just brought up a Vagrant VM with a script that automates the setup of all the MySQL versions, as shown below:

[root@mysql56 ~]# find / -name mysqld

/mysql50/bin/mysqld

/mysql56/bin/mysqld

/mysql51/bin/mysqld

/mysql55/bin/mysqld

[root@mysql56 ~]# ls -lh / | grep mysql

drwxr-xr-x 15 mysql mysql 4.0K May 31 01:12 mysql50

drwxr-xr-x 13 mysql mysql 4.0K May 31 00:35 mysql51

drwxr-xr-x 13 mysql mysql 4.0K May 31 01:15 mysql55

drwxr-xr-x 13 mysql mysql 4.0K May 31 00:16 mysql56

[root@mysql56 bin]# /etc/init.d/mysql50 status

MySQL is not running [FAILED]

[root@mysql56 bin]# /etc/init.d/mysql51 status

MySQL is not running, but PID file exists [FAILED]

[root@mysql56 bin]# /etc/init.d/mysql55 status

MySQL is not running, but PID file exists [FAILED]

[root@mysql56 bin]# /etc/init.d/mysql56 status

MySQL is not running, but PID file exists [FAILED]

[root@mysql56 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup00-LogVol00

7.7G 7.3G 0 100% /

/dev/sda1 99M 20M 75M 21% /boot

tmpfs 502M 0 502M 0% /dev/shm

/dev/sdb1 7.9G 147M 7.4G 2% /mysql50/datadir

/dev/sdc1 7.9G 147M 7.4G 2% /mysql51/datadir

/dev/sdd1 7.9G 147M 7.4G 2% /mysql55/datadir

/dev/sde1 7.9G 147M 7.4G 2% /mysql56/datadir
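The Fast Index Creation rule described at the start of this section can be sketched as a tiny decision function. This is a hypothetical helper, not part of MySQL or any tool. The operation labels are made up for illustration, and the function only encodes the version boundaries stated in this post (5.5, or 5.1 with the InnoDB Plugin). Primary key changes are treated as always rebuilding the table, because the clustered index holds the rows themselves.

```python
# Hypothetical helper: encodes the rule stated in this post, not a MySQL API.
FAST_INDEX_OPS = {"add_secondary_index", "drop_secondary_index"}


def rebuilds_whole_table(version, operation, innodb_plugin=False):
    """Return True if the ALTER TABLE is expected to rebuild the whole InnoDB table.

    version is a (major, minor) tuple such as (5, 5); operation is one of the
    hypothetical labels "add_secondary_index", "drop_secondary_index" or
    "change_primary_key".
    """
    if operation == "change_primary_key":
        # The clustered index holds the rows themselves, so it is always rebuilt.
        return True
    if operation in FAST_INDEX_OPS:
        # Fast Index Creation: 5.5+, or 5.1 with the InnoDB Plugin loaded.
        fast_index_creation = version >= (5, 5) or (version == (5, 1) and innodb_plugin)
        return not fast_index_creation
    raise ValueError(f"unknown operation: {operation!r}")


for v in [(5, 0), (5, 1), (5, 5), (5, 6)]:
    print(v, rebuilds_whole_table(v, "add_secondary_index"))
```

With the default `innodb_plugin=False`, only 5.0 and 5.1 report a full rebuild for a secondary index. That is what the "1000000 rows affected" lines in the results further down suggest.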

1. Create a complex test table and a stored procedure to populate the table:

I'm not sure the table below is as complex as the large tables we encounter in companies' databases. Generally, when tables are the target of INSERTs, DELETEs and UPDATEs, other factors must be considered. The main ones are data fragmentation caused by random access to InnoDB pages and inaccurate table statistics, and both can be addressed with OPTIMIZE TABLE. By the way, the time OPTIMIZE TABLE takes can be close to the times measured in these tests, since on InnoDB it rebuilds the table. Let's create the table and the procedure that will load data into it.

#

#: creating the database and a table to

#: accommodate data for the tests

#

mysql> CREATE DATABASE wb;

Query OK, 1 row affected (0.00 sec)

mysql> CREATE TABLE wb.tbl01 (

-> a bigint not null auto_increment primary key,

-> b varchar(144) not null,

-> c char(144) not null,

-> d longblob,

-> e longtext

-> ) ENGINE=InnoDB;

Query OK, 0 rows affected (1.68 sec)

#

#: stored procedure to load data in the table

#: (@u_var starts at 1: under the default SQL mode, a=0 makes AUTO_INCREMENT

#: generate 1, and the next INSERT with a=1 fails with a duplicate key error)

#

mysql> DELIMITER //

mysql> CREATE PROCEDURE wb.proc01(IN p_num BIGINT)

-> BEGIN

-> SET @u_var = 1;

-> WHILE @u_var <= p_num DO

-> INSERT INTO wb.tbl01

-> SET a=@u_var,

-> b=REPEAT(CONCAT(DATE_FORMAT(NOW(),'%d%m%Y%h%m%s'),md5(@u_var)),1),

-> c=REPEAT(CONCAT(DATE_FORMAT(NOW(),'%d%m%Y%h%m%s'),md5(@u_var)),1),

-> d=REPEAT(CONCAT(DATE_FORMAT(NOW(),'%d%m%Y%h%m%s'),md5(@u_var)),2),

-> e=REPEAT(CONCAT(DATE_FORMAT(NOW(),'%d%m%Y%h%m%s'),md5(@u_var)),2);

-> SET @u_var = @u_var+1;

-> END WHILE;

-> END //

Query OK, 0 rows affected (0.00 sec)

mysql> DELIMITER ;

#

#: this is the resultant data after running the procedure above

#

mysql> select * from wb.tbl01 limit 10\G

*************************** 1. row ***************************

a: 1

b: 23072014070734c4ca4238a0b923820dcc509a6f75849b

c: 23072014070734c4ca4238a0b923820dcc509a6f75849b

d: 23072014070734c4ca4238a0b923820dcc509a6f75849b23072014070734c4ca4238a0b923820dcc509a6f75849b

e: 23072014070734c4ca4238a0b923820dcc509a6f75849b23072014070734c4ca4238a0b923820dcc509a6f75849b

*************************** 2. row ***************************

a: 2

b: 23072014070734c81e728d9d4c2f636f067f89cc14862c

c: 23072014070734c81e728d9d4c2f636f067f89cc14862c

d: 23072014070734c81e728d9d4c2f636f067f89cc14862c23072014070734c81e728d9d4c2f636f067f89cc14862c

e: 23072014070734c81e728d9d4c2f636f067f89cc14862c23072014070734c81e728d9d4c2f636f067f89cc14862c

*************************** 3. row ***************************

a: 3

b: 23072014070734eccbc87e4b5ce2fe28308fd9f2a7baf3

c: 23072014070734eccbc87e4b5ce2fe28308fd9f2a7baf3

d: 23072014070734eccbc87e4b5ce2fe28308fd9f2a7baf323072014070734eccbc87e4b5ce2fe28308fd9f2a7baf3

e: 23072014070734eccbc87e4b5ce2fe28308fd9f2a7baf323072014070734eccbc87e4b5ce2fe28308fd9f2a7baf3

*************************** 4. row ***************************

a: 4

b: 23072014070734a87ff679a2f3e71d9181a67b7542122c

c: 23072014070734a87ff679a2f3e71d9181a67b7542122c

d: 23072014070734a87ff679a2f3e71d9181a67b7542122c23072014070734a87ff679a2f3e71d9181a67b7542122c

e: 23072014070734a87ff679a2f3e71d9181a67b7542122c23072014070734a87ff679a2f3e71d9181a67b7542122c

*************************** 5. row ***************************

a: 5

b: 23072014070734e4da3b7fbbce2345d7772b0674a318d5

c: 23072014070734e4da3b7fbbce2345d7772b0674a318d5

d: 23072014070734e4da3b7fbbce2345d7772b0674a318d523072014070734e4da3b7fbbce2345d7772b0674a318d5

e: 23072014070734e4da3b7fbbce2345d7772b0674a318d523072014070734e4da3b7fbbce2345d7772b0674a318d5

*************************** 6. row ***************************

a: 6

b: 230720140707341679091c5a880faf6fb5e6087eb1b2dc

c: 230720140707341679091c5a880faf6fb5e6087eb1b2dc

d: 230720140707341679091c5a880faf6fb5e6087eb1b2dc230720140707341679091c5a880faf6fb5e6087eb1b2dc

e: 230720140707341679091c5a880faf6fb5e6087eb1b2dc230720140707341679091c5a880faf6fb5e6087eb1b2dc

*************************** 7. row ***************************

a: 7

b: 230720140707348f14e45fceea167a5a36dedd4bea2543

c: 230720140707348f14e45fceea167a5a36dedd4bea2543

d: 230720140707348f14e45fceea167a5a36dedd4bea2543230720140707348f14e45fceea167a5a36dedd4bea2543

e: 230720140707348f14e45fceea167a5a36dedd4bea2543230720140707348f14e45fceea167a5a36dedd4bea2543

*************************** 8. row ***************************

a: 8

b: 23072014070734c9f0f895fb98ab9159f51fd0297e236d

c: 23072014070734c9f0f895fb98ab9159f51fd0297e236d

d: 23072014070734c9f0f895fb98ab9159f51fd0297e236d23072014070734c9f0f895fb98ab9159f51fd0297e236d

e: 23072014070734c9f0f895fb98ab9159f51fd0297e236d23072014070734c9f0f895fb98ab9159f51fd0297e236d

*************************** 9. row ***************************

a: 9

b: 2307201407073445c48cce2e2d7fbdea1afc51c7c6ad26

c: 2307201407073445c48cce2e2d7fbdea1afc51c7c6ad26

d: 2307201407073445c48cce2e2d7fbdea1afc51c7c6ad262307201407073445c48cce2e2d7fbdea1afc51c7c6ad26

e: 2307201407073445c48cce2e2d7fbdea1afc51c7c6ad262307201407073445c48cce2e2d7fbdea1afc51c7c6ad26

*************************** 10. row ***************************

a: 10

b: 23072014070734d3d9446802a44259755d38e6d163e820

c: 23072014070734d3d9446802a44259755d38e6d163e820

d: 23072014070734d3d9446802a44259755d38e6d163e82023072014070734d3d9446802a44259755d38e6d163e820

e: 23072014070734d3d9446802a44259755d38e6d163e82023072014070734d3d9446802a44259755d38e6d163e820

10 rows in set (0.00 sec)
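To make the generated values easier to read, here is a minimal Python sketch of what one iteration of wb.proc01 writes. It assumes MySQL's DATE_FORMAT semantics for '%d%m%Y%h%m%s'. Note that %m is the month in MySQL (minutes would be %i), so the month appears twice in the prefix. That is why rows inserted in July 2014 start with 23072014070734 whatever the minute was. The function names are hypothetical.

```python
import hashlib
from datetime import datetime


def date_prefix(ts):
    """Mimic MySQL DATE_FORMAT(ts, '%d%m%Y%h%m%s').

    %d day, %m month, %Y year, %h hour (01-12), %m month again, %s seconds.
    """
    hour12 = ts.hour % 12 or 12
    return (f"{ts.day:02d}{ts.month:02d}{ts.year:04d}"
            f"{hour12:02d}{ts.month:02d}{ts.second:02d}")


def row_values(n, ts):
    """Values one iteration of wb.proc01 would insert for @u_var = n."""
    # md5(@u_var) hashes the decimal string form of the number.
    chunk = date_prefix(ts) + hashlib.md5(str(n).encode("ascii")).hexdigest()
    # REPEAT(x, 1) is x itself; REPEAT(x, 2) concatenates x twice.
    return {"a": n, "b": chunk, "c": chunk, "d": chunk * 2, "e": chunk * 2}


row = row_values(1, datetime(2014, 7, 23, 19, 42, 34))
print(row["b"], len(row["b"]), len(row["d"]))
```

Each b/c value is 46 characters (14 for the date prefix plus 32 for the MD5 hex digest), and each d/e value is 92. So CHAR(144) column c is padded well beyond the data it holds.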

With the database, table and stored procedure set up, call the procedure to load the data into the table we'll use to benchmark fast index creation and removal across MySQL versions.

mysql> call wb.proc01(1000000);

Query OK, 0 rows affected (7 min 31.18 sec)

mysql> select count(*) from wb.tbl01;

+----------+

| count(*) |

+----------+

|  1000000 |

+----------+

1 row in set (1.72 sec)
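As a quick sanity check on the load time, the sketch below converts the mysql client's elapsed-time string into seconds and derives the average insert rate of the procedure (one row per INSERT). The parser is a hypothetical helper. It assumes the client prints durations as combinations of "day", "hour", "min" and "sec".

```python
import re

# Seconds per unit as printed by the mysql client ("1 hour 2 min 3.45 sec").
UNIT_SECONDS = {"day": 86400, "hour": 3600, "min": 60, "sec": 1}


def mysql_elapsed_to_seconds(text):
    """Convert a mysql client elapsed time such as '7 min 31.18 sec' to seconds."""
    total = 0.0
    for value, unit in re.findall(r"(\d+(?:\.\d+)?)\s*(day|hour|min|sec)", text):
        total += float(value) * UNIT_SECONDS[unit]
    return total


rows_loaded = 1_000_000
elapsed = mysql_elapsed_to_seconds("7 min 31.18 sec")
print(f"{elapsed:.2f} s, {rows_loaded / elapsed:.0f} rows/s")
```

That works out to roughly 2,200 rows per second on this small VM, which is only a rough figure for this environment.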

2. Create and drop a secondary index:

Let's index column c, creating a secondary index called i, and then drop it again on each MySQL version.
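Column c is a CHAR(144), so every entry of index i carries a fixed-width key plus the primary key value, because InnoDB secondary index records store the PK to locate the row. The sketch below computes a rough lower bound for the raw key payload of index i. It assumes a single-byte character set (e.g. latin1) and ignores record headers, page fill factor and non-leaf pages. The helper name is hypothetical.

```python
def secondary_index_payload_bytes(rows, key_bytes, pk_bytes):
    """Rough lower bound: each secondary index record holds the key plus the PK."""
    # Ignores record headers, page fill factor and non-leaf pages.
    return rows * (key_bytes + pk_bytes)


# Assumptions: CHAR(144) in a single-byte charset (144 bytes), BIGINT PK (8 bytes).
payload = secondary_index_payload_bytes(1_000_000, key_bytes=144, pk_bytes=8)
print(f"{payload:,} bytes (~{payload / 2**20:.0f} MiB) for index i")
```

Even under these simplifying assumptions, every version has to sort and write well over a hundred MiB of keys to build index i. The older versions also copy the whole table around it.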

##############################################

#

#: creating a secondary index on MySQL 5.0

#: Server version: 5.0.91 MySQL Community Server (GPL)

#

mysql> alter table wb.tbl01 add index i (c);

Query OK, 1000000 rows affected (7 min 33.84 sec)

Records: 1000000 Duplicates: 0 Warnings: 0

#

#: dropping a secondary index on MySQL 5.0

#

mysql> alter table wb.tbl01 drop index i;

Query OK, 1000000 rows affected (5 min 8.14 sec)

Records: 1000000 Duplicates: 0 Warnings: 0

For the record, when I ran the same steps on MySQL 5.1, calling the procedure returned this error message:

ERROR 1436 (HY000): Thread stack overrun: 8264 bytes used of a 131072 byte stack, and 128000 bytes needed. Use 'mysqld -O thread_stack=#' to specify a bigger stack.

I increased the thread_stack system variable to 192K, above the 131072-byte (128K) stack reported in the error, and restarted mysqld.
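The numbers in the error message explain the failure. The server needed 128000 more bytes on top of the 8264 already in use, and that does not fit in a 131072-byte stack. A minimal sketch of that arithmetic follows. It assumes the check is simply "used + needed >= stack size", as the message suggests; the function name is hypothetical.

```python
def stack_overrun(used, needed, stack_size):
    """Assumed check: fail when bytes in use plus the requested margin reach the stack size."""
    return used + needed >= stack_size


USED, NEEDED = 8264, 128000   # from the ERROR 1436 message
OLD_STACK = 131072            # 128K, as reported in the error
NEW_STACK = 192 * 1024        # thread_stack=192K

print(stack_overrun(USED, NEEDED, OLD_STACK))
print(stack_overrun(USED, NEEDED, NEW_STACK))
print(NEW_STACK - (USED + NEEDED), "bytes of headroom with 192K")
```

With 192K (196608 bytes), the same request leaves about 59K of headroom, which is why the procedure ran after the restart.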

##############################################

#

#: creating a secondary index on MySQL 5.1.70

#: Server version: 5.1.70 MySQL Community Server (GPL)

#

mysql> alter table wb.tbl01 add index i (c);

Query OK, 1000000 rows affected (7 min 10.73 sec)

Records: 1000000 Duplicates: 0 Warnings: 0

#

#: dropping a secondary index on MySQL 5.1.70

#

mysql> alter table wb.tbl01 drop index i;

Query OK, 1000000 rows affected (5 min 12.24 sec)

Records: 1000000 Duplicates: 0 Warnings: 0

##############################################

#

#: creating a secondary index on MySQL 5.5.33

#: Server version: 5.5.33 MySQL Community Server (GPL)

#

mysql> alter table wb.tbl01 add index i (c);

Query OK, 0 rows affected (1 min 21.68 sec)

Records: 0 Duplicates: 0 Warnings: 0

#

#: dropping a secondary index on MySQL 5.5.33

#

mysql> alter table wb.tbl01 drop index i;

Query OK, 0 rows affected (0.46 sec)

Records: 0 Duplicates: 0 Warnings: 0

###############################################

#

#: creating a secondary index on MySQL 5.6.17

#: Server version: 5.6.17 MySQL Community Server (GPL)

#

mysql> alter table wb.tbl01 add index i (c);

Query OK, 0 rows affected (1 min 39.08 sec)

Records: 0 Duplicates: 0 Warnings: 0

#

#: dropping a secondary index on MySQL 5.6.17

#

mysql> alter table wb.tbl01 drop index i;

Query OK, 0 rows affected (0.42 sec)

Records: 0 Duplicates: 0 Warnings: 0
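To put the results side by side, this sketch converts the mysql client timings reported above into seconds and computes each version's speedup relative to 5.0. The parser is a hypothetical helper. The ratios come only from the single runs shown here, so treat them as indicative rather than as a rigorous benchmark.

```python
import re

UNIT_SECONDS = {"day": 86400, "hour": 3600, "min": 60, "sec": 1}


def mysql_elapsed_to_seconds(text):
    """Convert a mysql client elapsed time such as '1 min 21.68 sec' to seconds."""
    return sum(float(v) * UNIT_SECONDS[u]
               for v, u in re.findall(r"(\d+(?:\.\d+)?)\s*(day|hour|min|sec)", text))


# Timings copied from the runs above: (add index i, drop index i).
TIMINGS = {
    "5.0.91": ("7 min 33.84 sec", "5 min 8.14 sec"),
    "5.1.70": ("7 min 10.73 sec", "5 min 12.24 sec"),
    "5.5.33": ("1 min 21.68 sec", "0.46 sec"),
    "5.6.17": ("1 min 39.08 sec", "0.42 sec"),
}

base_add, base_drop = (mysql_elapsed_to_seconds(t) for t in TIMINGS["5.0.91"])
speedups = {}
for version, (add, drop) in TIMINGS.items():
    add_s, drop_s = mysql_elapsed_to_seconds(add), mysql_elapsed_to_seconds(drop)
    speedups[version] = (base_add / add_s, base_drop / drop_s)
    print(f"{version}: add {add_s:7.2f}s (x{speedups[version][0]:.1f}), "
          f"drop {drop_s:7.2f}s (x{speedups[version][1]:.1f})")
```

Creating the index is roughly 4.6 to 5.6 times faster on 5.5/5.6. Dropping it goes from about five minutes to under half a second, because no table copy is involved.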

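The conclusion from these tests is that newer versions have improved over time, and Fast Index Creation really matters when working with secondary indexes. On 5.5 and 5.6, creating index i took under 2 minutes instead of more than 7, and dropping it took under half a second instead of more than 5 minutes. Note that 5.1.70 behaved like 5.0 and reported 1000000 rows affected, which indicates a full table copy. That is consistent with this test using the built-in InnoDB rather than the InnoDB Plugin. The results reinforce the logic behind InnoDB, whose secondary index lookups go through the Primary Key, so you should have a PK on every table. If you don't declare one, InnoDB uses the first UNIQUE index whose columns are all NOT NULL or, failing that, internally creates a hidden row ID column. Secondary indexes, on the other hand, can be changed at any time with a much faster response from MySQL. That makes them easy to add or remove in many cases (imagine you're redesigning the indexes of your database model).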
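The clustered index choice described above can be sketched as follows. The sketch models the rule from the MySQL manual: the PRIMARY KEY first, then the first UNIQUE index whose columns are all NOT NULL, otherwise a hidden GEN_CLUST_INDEX on a synthetic row ID. The Index class and the function name are hypothetical, and nothing here talks to a server.

```python
from dataclasses import dataclass


@dataclass
class Index:
    """Hypothetical description of an index definition, in declaration order."""
    name: str
    columns: tuple
    unique: bool = False
    primary: bool = False


def clustered_index_name(indexes, not_null_columns):
    """Pick the clustered index the way the MySQL manual describes InnoDB doing it."""
    for idx in indexes:
        if idx.primary:
            return idx.name
    for idx in indexes:
        # First UNIQUE index whose key columns are all declared NOT NULL.
        if idx.unique and all(col in not_null_columns for col in idx.columns):
            return idx.name
    # No suitable index: InnoDB adds a hidden clustered index on a synthetic row ID.
    return "GEN_CLUST_INDEX"


tbl = [Index("u_email", ("email",), unique=True), Index("u_code", ("code",), unique=True)]
print(clustered_index_name(tbl, not_null_columns={"code"}))
```

In the example, u_email is skipped because its column is nullable, so u_code becomes the clustered index. In wb.tbl01, the declared PRIMARY KEY on column a wins.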

Another point worth noting is that the on-disk data is smaller on the newer versions. 5.0 and 5.1 behaved the same regarding data size on disk, but on 5.5 and 5.6 the same amount of data resulted in a different total size:

[root@mysql56 mysql56]# df -lh

Filesystem Size Used Avail Use% Mounted on

/dev/sda1 99M 20M 75M 21% /boot

tmpfs 502M 0 502M 0% /dev/shm

/dev/sdb1 7.9G 1.3G 6.3G 17% /mysql50/datadir

/dev/sdc1 7.9G 1.3G 6.3G 17% /mysql51/datadir

/dev/sdd1 7.9G 744M 6.8G 10% /mysql55/datadir

/dev/sde1 7.9G 874M 6.7G 12% /mysql56/datadir
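The df figures can be turned into relative savings with a short sketch. It assumes df -h uses powers of 1024. Keep in mind that 1.3G is a rounded value and that each filesystem already held about 147M before the data was loaded, so the percentages are approximate. The helper name is hypothetical.

```python
# df -h suffixes, assumed to be powers of 1024, expressed in MiB.
UNIT_MIB = {"K": 1 / 1024, "M": 1, "G": 1024, "T": 1024 * 1024}


def df_to_mib(size):
    """Convert a df -h size such as '744M' or '1.3G' to MiB (approximate: df rounds)."""
    return float(size[:-1]) * UNIT_MIB[size[-1]]


# "Used" column for each datadir, copied from the df -lh output above.
USED = {"5.0": "1.3G", "5.1": "1.3G", "5.5": "744M", "5.6": "874M"}

baseline = df_to_mib(USED["5.0"])
savings = {v: 100 * (1 - df_to_mib(s) / baseline) for v, s in USED.items()}
for version, pct in savings.items():
    print(f"{version}: {USED[version]:>5} used, {pct:4.1f}% less than 5.0")
```

On these numbers, the 5.5 datadir uses roughly 44% less space than 5.0, and 5.6 roughly 34% less.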

Index Creation

Dropping Index
